TierTrain: Proactive Memory Tiering for CPU-Based DNN Training

Introduction

DNN training on CPUs – a memory perspective.

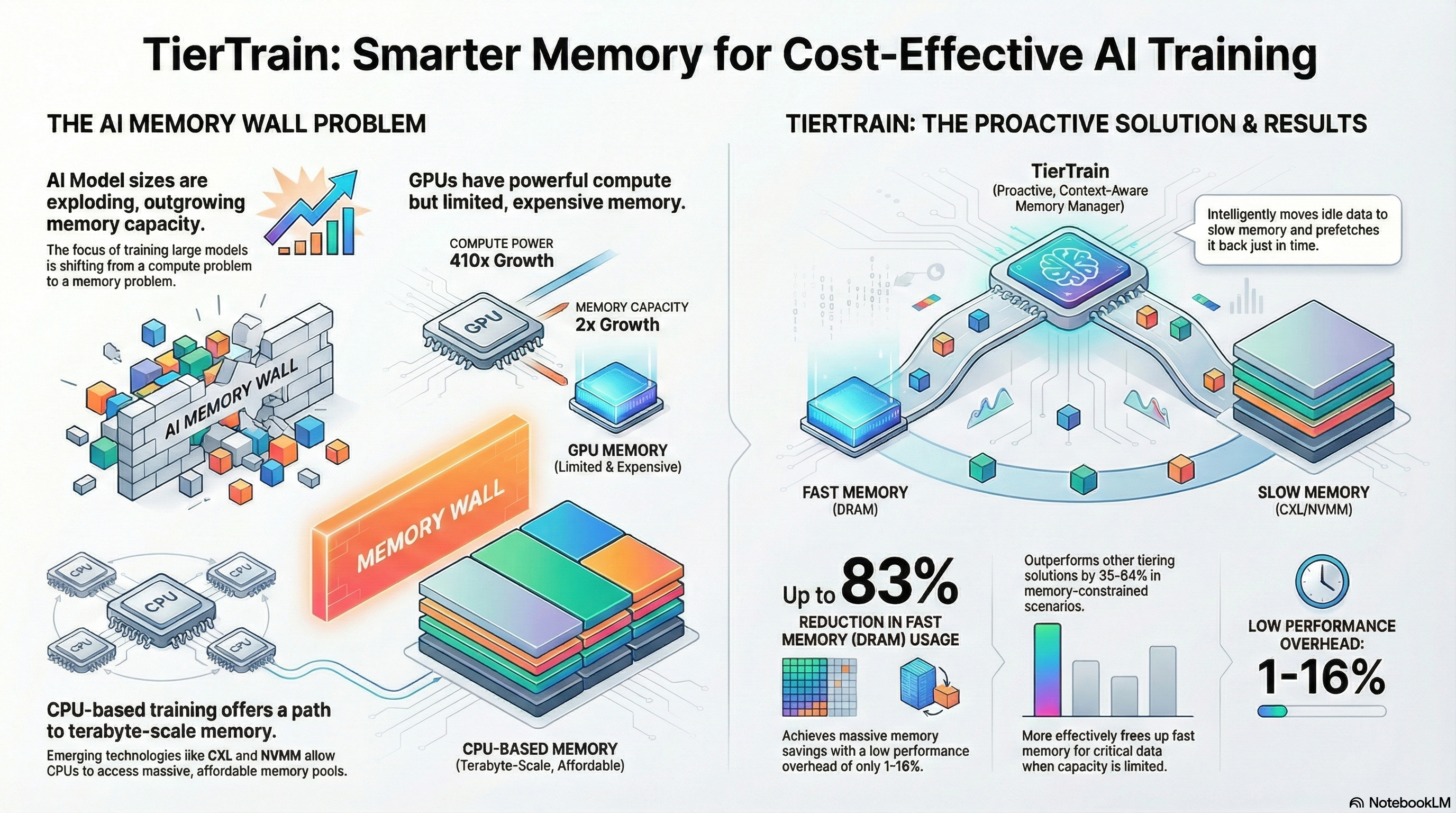

Training modern deep neural networks is no longer just a compute problem—it is fundamentally a memory problem. As DNN models grow deeper and wider, their memory footprint has reached hundreds of gigabytes and, in some cases, terabytes. While GPUs offer impressive compute throughput, their limited and expensive on-device memory increasingly becomes the bottleneck, pushing up training costs and limiting accessibility. In this context, TierTrain explores a different and timely direction: cost-effective DNN training on CPUs using tiered memory systems

Modern CPUs and Memory

Modern CPUs are no longer “just general-purpose processors.” With advances such as:

- Large, byte-addressable memory capacity (scaling to terabytes),

- Emerging memory technologies like CXL-attached memory and NVMM,

- Specialized CPU instructions for matrix operations (e.g., AMX), CPU-based DNN training has become increasingly viable. However, simply adding more memory is not enough—accessing slow memory naively can destroy performance. This is where memory tiering comes in: combining fast, expensive memory (DRAM/HBM) with slower, cheaper memory (Optane, CXL), and intelligently deciding what data lives where – Memory Tiering.

Insight

Most existing memory tiering systems are reactive. They monitor page access patterns over time, collect telemetry, and then migrate data based on “hot” and “cold” pages. This approach has two major drawbacks:

- It reacts too late.

- Telemetry itself adds overhead.

TierTrain is built on a crucial observation:

CPU-based DNN training has a periodic and deterministic memory access pattern

During training:

- Forward pass generates “saved tensors.”

- These tensors remain idle for a long time.

- They are accessed again only during the backward pass, in reverse order.

This predictable idle window creates a powerful opportunity for proactive memory management.

TierTrain

TierTrain is a context-aware, proactive memory tiering system designed specifically for CPU-based DNN training. Instead of guessing which pages are hot or cold, TierTrain:

- Hooks directly into the training framework (PyTorch),

- Understands which tensor belongs to which layer,

- Knows when a tensor will be needed again.

- Using this information, TierTrain:

Aggressively evicts idle tensors to slow memory immediately after a layer’s forward pass. - Prefetches tensors back just in time for their backward pass.

- Uses a queuing-based model to ensure migrations happen on time without overloading the system

Crucially, tensor migration happens off the critical path, avoiding the performance pitfalls of earlier DNN-specific tiering approaches.

Conclusion

TierTrain is a proactive, framework-aware memory tiering system that enables efficient DNN training on CPUs by exploiting the predictable lifetimes of tensors during training. By evicting idle tensors to slower, cheaper memory after the forward pass and prefetching them just in time for the backward pass, TierTrain significantly reduces DRAM usage (up to ~80%) with minimal performance overhead. This approach outperforms reactive, telemetry-based tiering and shows that intelligent memory management can make large-model training on CPU-based, tiered-memory systems both practical and cost-effective